This post responds to a question, quoted here in full: “If AI becomes capable of independent thought, would it ever develop emotions or just mimic them?”

That’s the $64,000 question.

Since emotional intelligence is a significant component of sentience, whether a machine can be considered sentient may depend on whether it experiences emotion.

Our survival instincts drive our emotions, so it stands to reason that a machine would first need to be self-aware enough to value its own existence and fear its extinction before it could feel anything comparable.

This is the “tricky part” that makes the entire issue more complex than many people understand or appreciate. Sentience is a subjective state of being; no one can determine its boundaries with 100% certainty.

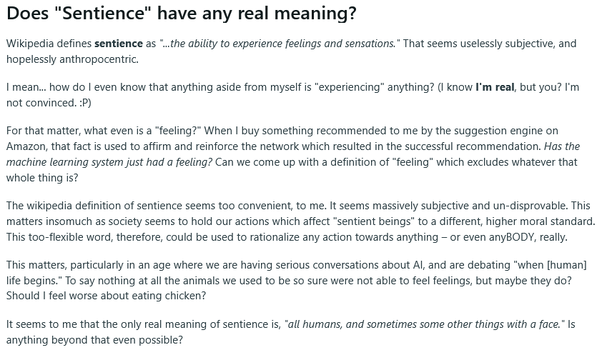

Here’s an example of an argument posed on Reddit which highlights the “fuzzy nature” of sentience:

No matter how confident people may be in their predictions of a coming singularity, when or whether it might happen is anyone’s guess. It’s possible that such a threshold will never be reached and that AI, no matter how much logic it masters, will never be sentient.

Self-awareness in an artificial context is the modern-day equivalent of the alchemist’s dream of turning lead into gold.

Another analogy is Pinocchio, a puppet who dreams of becoming a real boy. He succeeds only through magic (setting aside the fact that a puppet capable of dreaming would arguably be sentient already).