This post responds to a question, posed here in full: “Do you think the late 21st century will be different from the early 21st Century just like the early and late 20th Century are nothing alike?”

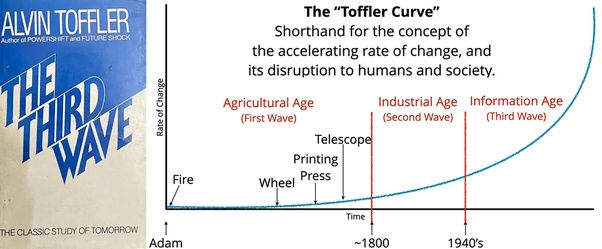

The rate of change has been steadily increasing. We (the public at large) have been made aware of this increasing rate of change since Alvin Toffler’s Future Shock was published in 1970.

Re-reading Future Shock, 50 years on

“Western societies for the past 300 years have been caught up in a firestorm of change. This storm, far from abating, now appears to be gathering force.” (p.18)


“Future shock is the dizzying disorientation brought on by the premature arrival of the future… [It] is a time phenomenon, a product of the greatly accelerated change in society.” (pp.19-20)

The difference between the early and late 21st century will be far more pronounced than the changes that occurred over the previous century, or over any century before it.

Most answers focus on technological change because it is the most apparent kind: many of us can still remember an analogue age in which telephone communication involved an electronic umbilical cord and the only screens were televisions equipped with oddities called “rabbit ears.”

OMG! You had to get up from your seat and move a few feet before turning a dial to watch something different. Ever since enduring that torturous existence, we have demanded a remote control for almost every electronic device. Now we’re drowning in remotes we can’t find when we need them, and they demand precious dollars for the disposable batteries that keep them running.

Technological change alone represents multiple dramatic transformations of human society and of how we will live from day to day. According to Toffler’s third future-prediction book, “The Third Wave,” today’s world of work will seem both alien and punitive from the vantage point of a future world of work that more closely resembles pre-industrial human society.

Technological change expands the possibilities of what can be considered human and redefines humanity itself. We can already glimpse a massively transformed future for human biology through expanding medical and healthcare solutions to physiological needs and through the emergence of a transhumanist movement that emphasizes the benefits of technological augmentation. While we remain cautious about biological alterations and favour non-invasive technologies, prosthetic solutions to limb loss, for example, are becoming increasingly human-like in function and in some respects already outperform their biological counterparts.

Artificial enhancements have been treated as social taboos, much as tattoos were for a short period under Victorian notions of socially acceptable norms; however, they could conceivably become a popular means of “touching pseudo-immortality” and of achieving small degrees of “super-humanity.” Genetic modification will expand beyond preventing the transmission of genetic diseases to include prenatal selection of traits for one’s children. This will happen despite moral outrage, because those with means will seek the greatest advantages they can for their lineages.

Technological change, however, is not the most radical change we are currently undergoing. Technology has inspired, enabled, fueled, and empowered the most radical change to date: the change in ourselves.

We, as humans, are transforming dramatically, through growing pains forced on us by the need to build a cooperative world in which cultures that once existed in isolation must now become interdependent to survive. Human psychology is being fundamentally restructured across the globe, in keeping with nature’s demand that we adapt or die.

Old forms of thinking and social organization cannot survive this transition without severely curtailing our social evolution, and they are trying to do precisely that. The MAGA sensibility, with its attachment to a fictional nostalgia in which familiar power structures continue to wreak havoc on outsiders, is unsustainable in a global community that thrives on diversity.

We must learn to communicate and cooperate through mutual respect, and that unfinished lesson is why so much is so messy today. We haven’t grown up. We’re still in grade school, where our leaders mock 12-year-old girls and their base dismisses it as irrelevant.

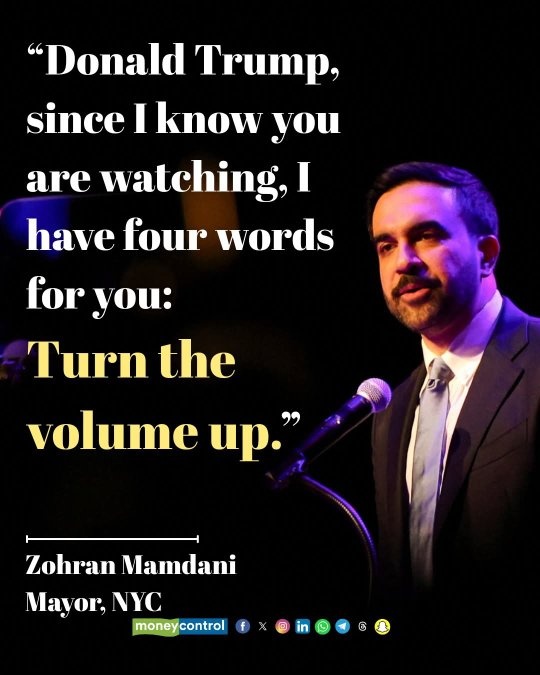

Technology has created a focal point through which we are now confronted with the sum of our human flaws and weaknesses, as well as the social, economic, and psychological dysfunctions we have inherited from our forebears. Everything we once ignored and silently turned away from has become magnified and loud.

With each passing day, the volume of discord rises as we negotiate new terms for the social contracts binding us all to a construct called “civilized society.”

“Millions of ordinary psychologically normal people will face an abrupt collision with the future.” (p.18)

We have become aware of the toxic remnants left behind by our primitive ancestors. The drive for conquest, domination, and exploitation of society’s most vulnerable has reached a fever pitch as dinosaur gatekeepers rail against the loss of their power while confronting their own limits in their waning years.

We are undergoing massive power shifts and hand-me-downs as new dynasties emerge, in which the powerful take what they want despite protestations, pleas, and persistent reminders of the values of a world of equally free people, not kingdoms of serfs whose rulers deny the people their needs to favour their own luxuries.

The powerful take what they want because they can

And now the people are beginning to say, “No.”

We are increasingly aware that what we become is what we allow.

We have all seen this movie, though some of us seem to have slept through the Reality Onboarding Orientation Program (Introductory ROOP) and missed what’s going on in GongShow Reality Tunnel #42. Either way, we all get to enjoy the cataclysmic scenery together.

We are herded about to feed on words, mostly instructions telling us how we must live.

At their behest.

Humanity is changing, and the cycles can repeat only so often before enough of us stop and say, “Enough.”

This ends here. This culture of casual cruelty ends now. Right here. Today.

We are human beings: we know we become chaos whenever bound or chained.

We embrace that because human society survives only when humans are equal.

“[There is] a racing rate of change that makes reality seem, sometimes, like a kaleidoscope run wild.” (p.19)

Amplifying such voices through their megaphones, the powerful seek to dominate the many

because they know how to run the show.

This dynamic ebb and flow of power in an endless game of take, take, take

will last only until it breaks.

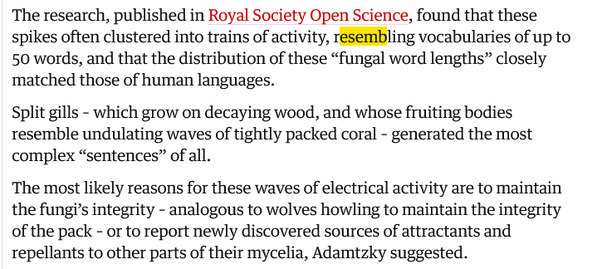

Meanwhile, countless pressures are amplified by their instantaneity and fed into complex formulas that attempt to quantify interpersonal dynamics through opaque functions, algorithms, and equations.

The result is chaos.

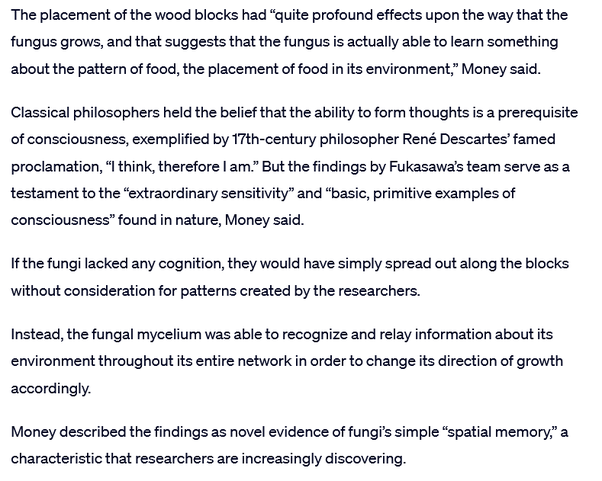

As it turns out, humans are not quantifiable

We never were

Humans have always been chaos

Automation through AI and robotics that can provide for every socially practical human need

could dispense with work altogether, while consolidated powers ignore how their consumption

is destroying our climate, and still we are told that we must be bold

as they raid our home of all its gold.

Conditions are ripe for a massive reset for how we live and how we think about living.

What can we do?

Fifty years ago, Future Shock attempted to quantify chaos. Today, its vision of the future seems as quaint as the original Star Trek.

We don’t know what surprises are in store that could set us on a trajectory in any direction.

We do know that we stand at a crossroads today, one that will determine a fundamental,

not cosmetic, alteration of human life and society as we know it.

That’s a guarantee.

The transformation ahead will matter far more to tomorrow

than the Industrial Age has mattered to today.

Tomorrow is as unimaginable as today will be tomorrow.

“Once emptied, the future can be filled with anything, with unlimited interests, desires, projections, values, beliefs, ethical concerns, business ventures, political ambitions…”